The history of Artificial Intelligence (AI) spans several decades, tracing its evolution from early theoretical work to the groundbreaking technological advancements of the 21st century. AI refers to the ability of a digital computer or computer-controlled robot to perform tasks traditionally associated with intelligent beings. This includes processes such as reasoning, meaning discovery, generalization, and learning from experience.

The roots of AI can be traced back to the 1930s, with the pioneering work of British logician Alan Turing, whose ideas laid the foundation for much of modern AI research. In 1936, Turing described an abstract computing device, now known as the Turing machine, that can carry out any computation expressible as a step-by-step procedure. It was a seminal contribution to computational theory and, later, to artificial intelligence.

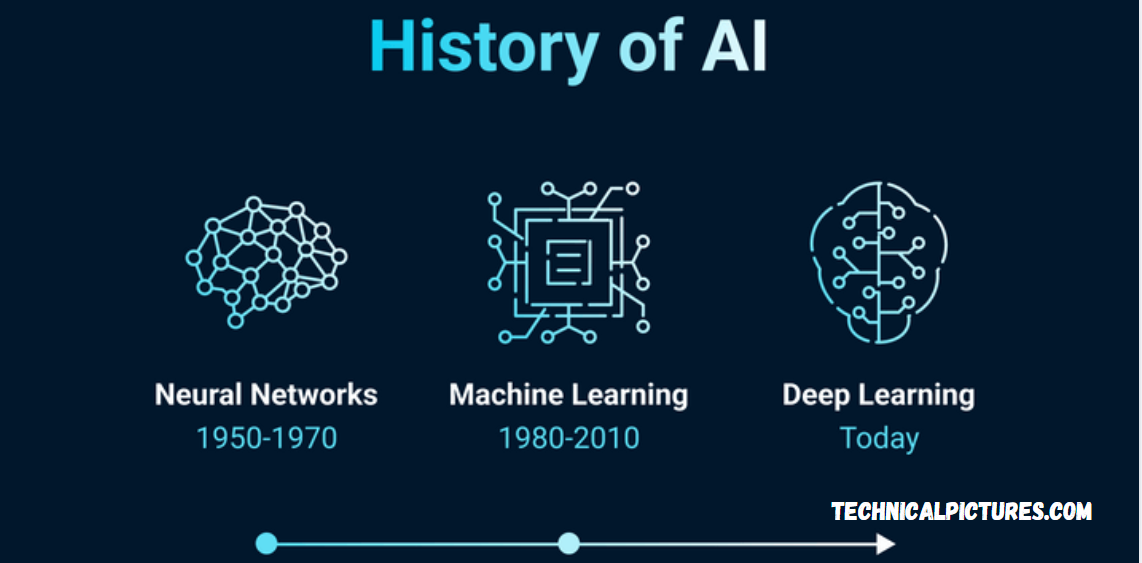

Over the years, AI evolved through a series of milestones driven by the efforts of researchers, mathematicians, and engineers. From the creation of early algorithms to the development of neural networks, AI has steadily progressed, leading to the emergence of machine learning, natural language processing, and cognitive computing in the late 20th and early 21st centuries.

As the field continues to advance, AI has moved from abstract theoretical concepts to tangible technologies shaping industries and society. The history of AI is a story of both intellectual ambition and technological innovation, reflecting humanity’s quest to understand and replicate intelligent behavior through machines.

The Early Foundations: 1930s to 1950s

The origins of AI can be traced to the early 20th century, but one of the most influential figures in its development was British mathematician and logician Alan Turing. In 1936, Turing described the Turing machine, a mathematical model of computation that would become a foundational idea for AI and computer science. In his 1950 paper, “Computing Machinery and Intelligence,” Turing posed the question, “Can machines think?” He introduced what is now called the Turing Test (he called it the imitation game), a method for judging whether a machine’s conversational behavior can be told apart from a human’s, a concept that remains central to AI debates today.
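To make the idea of a "mathematical model of computation" concrete, here is a minimal sketch of a Turing machine simulator in Python. The function name `run_turing_machine`, the rule-table layout, and the bit-flipping example machine are illustrative assumptions, not anything taken from Turing's paper.

```python
def run_turing_machine(tape, rules, state="start", blank="_", max_steps=1000):
    """Run a single-tape machine and return the final tape contents.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is "L" or "R". The machine stops in the state "halt".
    """
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
    return "".join(cells[i] for i in sorted(cells)).strip(blank)


# Hypothetical example machine: invert every bit, then halt at the first blank.
FLIP_BITS = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", "_"): ("_", "R", "halt"),
}

print(run_turing_machine("1011", FLIP_BITS))
```

The whole machine is just a lookup table plus a read/write head, which is why the model is simple enough to reason about mathematically while still capturing what it means to compute.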

Turing’s work laid the groundwork for AI research, but the term “Artificial Intelligence” was not coined until the mid-1950s. The Dartmouth Conference in 1956, organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, is widely regarded as the birthplace of AI as a formal field of study. McCarthy, in particular, is credited with coining the term in the 1955 proposal for the conference, which marked the beginning of a concentrated effort to create intelligent machines.

The Early AI Programs and Optimism: 1950s to 1970s

Researchers began developing the first AI programs in the late 1950s and 1960s. Early successes included Newell and Simon’s General Problem Solver (GPS), which attempted to mimic human problem-solving techniques, and ELIZA, a rudimentary natural language processing program created by Joseph Weizenbaum at MIT in the 1960s. ELIZA simulated a conversation with a psychotherapist and demonstrated the potential for machines to interact with humans using language.

Despite these initial successes, the field faced challenges. Early AI systems were constrained by limited computing power and insufficient data. Researchers nevertheless remained optimistic, believing that intelligent machines were within reach. In the 1970s, that optimism gave way to a first period of disillusionment, often called the first “AI Winter,” as progress slowed and funding was cut in response to unmet expectations.

Expert Systems and Revival: 1980s to 1990s

The 1980s saw a revival of AI research, mainly due to the commercial success of expert systems: AI programs designed to solve specific problems within a narrowly defined domain by emulating the decision-making ability of human experts. These systems were used in industries such as medicine, engineering, and finance. Notable examples include MYCIN, developed at Stanford in the 1970s to help diagnose bacterial infections, and XCON, which Digital Equipment Corporation used in the 1980s to configure computer systems.

The 1980s also saw the rise of neural networks, which are computational models inspired by the human brain. While early neural networks had limited success, they laid the foundation for the later development of deep learning algorithms in the 2000s.

The 1990s brought significant advancements in AI, particularly in machine learning. A landmark moment occurred in 1997, when IBM’s Deep Blue defeated world chess champion Garry Kasparov in a six-game match, demonstrating how far large-scale search and specialized hardware could take a machine in a game of strategy.

The Age of Machine Learning and Deep Learning: 2000s to Present

As computing power grew and large datasets became more readily available, AI entered a new era marked by machine learning, a subfield of AI focused on developing algorithms that allow machines to learn from data without being explicitly programmed. The 2000s witnessed rapid progress in machine learning, with methods such as support vector machines, decision trees, and random forests coming into widespread use.

A game-changer for AI came in the mid-2000s with the resurgence of neural networks and the introduction of deep learning, which stacks many layers of artificial neurons so that each layer builds on the patterns found by the one before it. Deep learning algorithms have been used to make significant breakthroughs in fields such as image and speech recognition, natural language processing, and autonomous driving.
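As a rough illustration of what "layers of neurons" means in practice, the sketch below passes an input through two small fully connected layers in plain Python. The helper names (`dense`, `relu`, `forward`) and the hand-picked weights are assumptions made for this example; real deep learning models learn their weights from data and use optimized libraries.

```python
def relu(values):
    """Common activation function: keep positive values, zero out the rest."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: each output neuron is a weighted sum plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Feed inputs through every layer, applying ReLU between layers."""
    activations = inputs
    for i, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if i < len(layers) - 1:  # no activation after the final layer
            activations = relu(activations)
    return activations


# Hypothetical hand-picked weights: 2 inputs -> 2 hidden neurons -> 1 output.
LAYERS = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 1.0]], [0.5]),
]

print(forward([2.0, 1.0], LAYERS))
```

A "deep" network is the same idea with many more layers and neurons, which is why the growth in computing power and data mattered so much for this approach.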

In 2012, AlexNet, a deep learning model developed by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton, won the ImageNet competition by a large margin, marking a breakthrough in image recognition. This event sparked a surge of interest and investment in deep learning and AI technologies.

The 2010s also saw the rise of reinforcement learning, which involves training AI systems through rewards and punishments. This has led to remarkable achievements such as AlphaGo, Google DeepMind’s AI system that defeated world champion Go player Lee Sedol in 2016. Reinforcement learning has been applied to complex tasks, including robotics and game playing.
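The "rewards and punishments" idea can be sketched with tabular Q-learning, one of the simplest reinforcement learning algorithms. This is a toy under stated assumptions, not how AlphaGo works: the five-cell corridor, the reward values, and the function names `step`, `train`, and `greedy_policy` are all invented for illustration.

```python
import random

N_STATES = 5      # corridor cells 0..4; cell 4 is the goal
ACTIONS = (0, 1)  # 0 = step left, 1 = step right


def step(state, action):
    """Move one cell and return (next_state, reward)."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    # Reward for reaching the goal, small punishment for every other move.
    reward = 1.0 if next_state == N_STATES - 1 else -0.01
    return next_state, reward


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn a table of action values q[state][action] by trial and error."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            if rng.random() < epsilon:  # explore occasionally
                action = rng.choice(ACTIONS)
            else:                       # otherwise act on current estimates
                action = max(ACTIONS, key=lambda a: q[state][a])
            next_state, reward = step(state, action)
            # Q-learning update: nudge the estimate toward reward + discounted future value.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


def greedy_policy(q):
    """Best-known action for each non-goal cell."""
    return [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]


print(greedy_policy(train()))
```

After training, the agent should prefer stepping right in every cell, having learned this purely from the reward signal rather than from explicit instructions.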

AI in Everyday Life

Today, AI is integrated into many aspects of daily life. From virtual assistants like Siri and Alexa to self-driving cars, AI technologies are revolutionizing industries and shaping the future of work. In healthcare, AI is used for diagnostics, personalized medicine, and drug discovery. In finance, AI algorithms are employed for trading, fraud detection, and risk assessment. AI is also making significant strides in entertainment, creating more immersive experiences through recommendation algorithms and AI-generated content.

Despite these advancements, AI still faces challenges. Issues such as ethical concerns, bias in algorithms, data privacy, and the impact of automation on jobs continue to be debated. Nevertheless, AI continues to evolve, and its potential to transform society remains immense.

Frequently Asked Questions

What is Artificial Intelligence (AI)?

AI refers to the ability of machines or computer systems to perform tasks that typically require human intelligence. These tasks include problem-solving, learning, reasoning, understanding language, and perception. AI systems are designed to mimic or simulate aspects of human intelligence.

Who is considered the father of AI?

Alan Turing, a British mathematician and logician, is often considered the father of AI. His work on the Turing machine and the Turing Test laid the theoretical foundation for modern AI, and his ideas influenced the development of computational theory and the study of machine intelligence. John McCarthy, who coined the term “Artificial Intelligence,” is also frequently given that title.

When did AI research begin?

AI research began in earnest in the 1950s. The Dartmouth Conference of 1956 is considered the formal birth of AI as a scientific field. The conference brought together leading researchers such as John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, who worked to define AI and its potential applications.

What was the Turing Test, and why is it important?

The Turing Test, proposed by Alan Turing in 1950, is a test of a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human. It remains an important benchmark in discussions of AI, particularly regarding whether machines can truly “think” like humans.

What is the significance of neural networks in AI?

Neural networks, inspired by the structure of the human brain, are computational models that can learn from data. Although they have been around since the 1950s, they gained prominence in the 1980s and 2000s due to advances in computing power and the development of deep learning—a form of neural network with many layers that can recognize complex patterns.

What was the breakthrough moment in AI?

One of the major breakthrough moments in AI history came in 1997 when IBM’s Deep Blue defeated world chess champion Garry Kasparov. This victory demonstrated the power of AI in strategic decision-making and problem-solving. It was a pivotal moment that highlighted AI’s potential in complex tasks.

What is deep learning, and why is it important?

Deep learning is a subset of machine learning that uses deep neural networks to process large volumes of data. Deep learning algorithms have been key to advancements in speech recognition, image recognition, and natural language processing. Breakthroughs like AlexNet in 2012, which excelled at image recognition, demonstrated the effectiveness of deep learning in real-world applications.

Conclusion

The history of Artificial Intelligence (AI) is a testament to human ingenuity, perseverance, and the relentless pursuit of replicating the complex and dynamic nature of human intelligence. From the early theoretical groundwork laid by Alan Turing in the 1930s to the transformative breakthroughs in deep learning, neural networks, and machine learning in the 21st century, AI has evolved from abstract ideas into tangible technologies that shape our daily lives.

Throughout its history, AI has gone through periods of optimism, setbacks, and stagnation, such as the AI Winter. However, the resilience of researchers and the steady improvement in computational power, data availability, and algorithmic development have propelled AI to where it is today. AI systems are now integrated into various sectors, including healthcare, finance, transportation, and entertainment, revolutionizing industries and enhancing the way we live, work, and interact.